Random-digit dialing (RDD) is no longer the gold standard for survey samples. Seasoned researchers recall a time before most U.S. adults owned a cell phone, and it wasn’t that long ago. As recently as the 1990s, any researcher who wanted to conduct a high-quality survey would automatically choose a telephone survey, using an RDD telephone sample from a reputable provider. Everyone accepted this as the best method of survey research. No questions asked.

Now the world of survey research is more complex: methodology choices are no longer clear-cut, and a “gold standard” may no longer exist. According to the latest data released by the U.S. Centers for Disease Control and Prevention (CDC), roughly one-third of households either no longer have (18%) or no longer use (15%) traditional landline telephones. This one fact indicates that the non-coverage bias of a landline telephone survey could be larger than that of an online survey. Assuming an online panel is entirely representative of the online universe (which it is not), online coverage in the U.S. is now 78%, compared to 67% for landline telephone surveys. The bottom line is that there is no longer a straightforward way to sample households without taking special steps to ensure quality.
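The 67% landline figure follows directly from the two CDC percentages quoted above. As a quick check, the arithmetic can be sketched as follows; the variable names are illustrative only, and the 78% online figure is taken from the text as an upper bound rather than computed.

```python
# Shares of U.S. households, in percent, as quoted in the text (CDC figures).
no_landline = 18       # households that no longer have a landline
unused_landline = 15   # households that have a landline but no longer use it

# Households a landline-only sample cannot effectively reach.
landline_noncoverage = no_landline + unused_landline
landline_coverage = 100 - landline_noncoverage

# Online coverage as quoted in the text. It is an upper bound, because it
# assumes the panel perfectly represents the online population.
online_coverage = 78

print(f"Landline non-coverage: {landline_noncoverage}%")
print(f"Landline coverage: {landline_coverage}%")
print(f"Online minus landline coverage: {online_coverage - landline_coverage} points")
```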

Online samples are not bulletproof either. There are certainly many concerns with the representativeness of online panels, including the above-mentioned non-coverage bias, self-selection of panelists, and professional respondents. While there is little a researcher can do about non-coverage and self-selection, we now have the tools to eliminate professional respondents from our datasets. Several types of professional respondents are considered undesirable: those who take too many surveys (hyperactive), those who are not fully paying attention to the survey questions (inattentive), and those who provide fraudulent or dishonest answers in an attempt to game the incentive system (fraudulent). To identify these respondents and eliminate them from a dataset, Rockbridge has developed its SafeSample™ methodology.

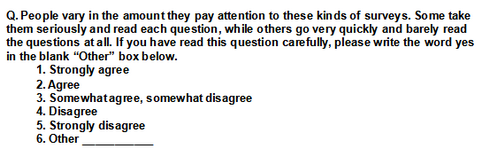

Rockbridge has a new methodology for addressing online panel sample quality: SafeSample™. SafeSample™ is a five-step approach developed after more than three years of researching and testing online sample quality procedures. This methodology employs a mix of tests, trick questions, and data analysis to identify and eliminate problem respondents. For instance, one of the steps to identify hyperactive respondents is simply to ask how many surveys the respondent has taken in the past 30 days. In addition, a test used to expose inattentive survey takers is the trick question below, originally developed by Harvard University researchers.

And, while there are many tests for unearthing fraudulent respondents, one successful technique is to include an implausible product, such as the telegraph, in a product ownership question that lists many products and services; a respondent who claims to own it is probably not answering honestly. SafeSample™ also asks respondents to rate the survey itself, so that we remain mindful of the burden we place on respondents.

Perhaps the most important step in this process is analyzing the data to determine who is a bad respondent. Interestingly, the research Rockbridge has conducted shows that one bad answer does not make a bad respondent. As such, careful analysis and cleaning of the data ensure that the truly bad data is eliminated while the good data is retained. Through this methodology, Rockbridge continues to lead the way in effective online surveys.
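To make the "one bad answer does not make a bad respondent" idea concrete, here is a minimal sketch of how the three checks described above might be combined. It is not Rockbridge's actual SafeSample™ procedure: the field names, the cutoff of 20 surveys, and the rule of excluding only respondents with two or more flags are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# All thresholds and field names here are illustrative assumptions,
# not Rockbridge's actual SafeSample rules.
MAX_SURVEYS_30_DAYS = 20   # hypothetical cutoff for "hyperactive"
MIN_FLAGS_TO_EXCLUDE = 2   # a single flag alone does not exclude anyone


@dataclass
class Response:
    respondent_id: str
    surveys_past_30_days: int     # self-reported number of surveys taken
    passed_trick_question: bool   # result of the attention check
    claims_bogus_product: bool    # e.g. says they own a telegraph


def quality_flags(r: Response) -> list[str]:
    """Return the list of quality checks this response failed."""
    flags = []
    if r.surveys_past_30_days > MAX_SURVEYS_30_DAYS:
        flags.append("hyperactive")
    if not r.passed_trick_question:
        flags.append("inattentive")
    if r.claims_bogus_product:
        flags.append("fraudulent")
    return flags


def clean(responses):
    """Split responses into (kept, removed) based on the number of flags."""
    kept, removed = [], []
    for r in responses:
        if len(quality_flags(r)) >= MIN_FLAGS_TO_EXCLUDE:
            removed.append(r)
        else:
            kept.append(r)
    return kept, removed


sample = [
    Response("r1", 3, True, False),    # no flags
    Response("r2", 45, True, False),   # one flag (hyperactive): kept
    Response("r3", 60, False, True),   # three flags: removed
]
kept, removed = clean(sample)
print([r.respondent_id for r in kept])     # ['r1', 'r2']
print([r.respondent_id for r in removed])  # ['r3']
```

Counting flags rather than dropping a respondent on the first failed check is one simple way to keep otherwise good data; a real cleaning step might weight the checks differently or review borderline cases by hand.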